Version 1.2

July 8th, 2021

Quite a bit has changed in the past year: I've moved across the country, the world went remote, and much more. This did interrupt progress on the site, and for that I have to apologize. Thankfully, jfqd over on GitHub called this out in the issue report tool, and I made the first (albeit small) update to the site in some time.

Mozilla has been working on the Feature-Policy header, which we've supported for some time now, but it was renamed to Permissions-Policy some time ago. We kept the Feature-Policy module but removed any weight this header had. If you do not have it, no worries: no impact to the score, and no output. If you do, we will kick out a warning to update this to Permissions-Policy (even if you have both).

For the Permissions Policy header, we simply rewrote the feature policy header module into that with the same weight. So, if you had updated your header nomenclature, then you should maintain the same score in v1.2. I do have plans to add better CSP and Permissions Policy inspection -- but it is quite difficult since it is hard to define what components of it you should be using without scanning your entire site.
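To make the rule above concrete, here is a rough Python sketch of how the two headers could be treated. The function name, the warning text, and the weight of 5 are all made up for illustration; the real module and its weight are not shown here.

```python
def policy_header_check(headers, weight=5):
    """Score Permissions-Policy; only warn about the old Feature-Policy name.

    `weight` is a hypothetical value, not the scanner's real weight.
    """
    names = {name.lower() for name in headers}
    warnings = []
    # The old header carries no weight, but its presence triggers a warning,
    # even when Permissions-Policy is also set.
    if "feature-policy" in names:
        warnings.append("Feature-Policy was renamed; update to Permissions-Policy.")
    score = weight if "permissions-policy" in names else 0
    return score, warnings
```

A site sending only Feature-Policy would get zero points plus the warning, while a site sending only Permissions-Policy would get the full (assumed) weight and no output.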

Version 2.0, but not like that...

March 6th, 2020

Our automatic scanning has been a hit: nearly 700,000 sites have been scanned at this point, and we decided to slow our roll so we can take that amount of data, understand the workflow, and optimize how we work with it. This is causing some noticeable performance issues in the scanner itself, but nothing that should be too devastating.

On that note, we stumbled upon the Google Chrome feature Lighthouse. This gave us some really great insight into what portions of the site slow things down. Our big ol' background picture was ~950 KB. Changing that to WebP (from JPEG) brought it down closer to ~110 KB. Still astronomical, but whatever -- pretty site wins.

Last but not least, we want this site to deliver what we tell others they should -- we should have ideal headers ourselves. The HTTP version is part of the response too, so we did some updates and moved to PHP-FPM (from FCGI), and moved Apache to serve via HTTP/2 (where supported). This should give us some great performance enhancements.

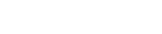

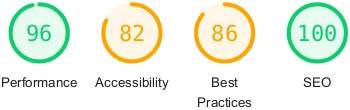

Before...

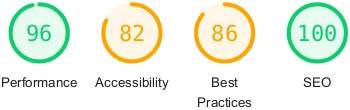

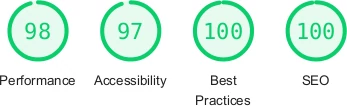

After...

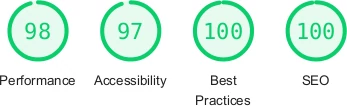

But then this...

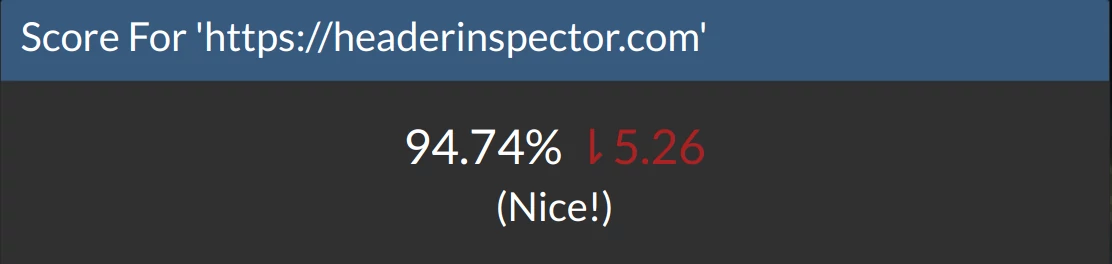

Yikes...

Turns out this little dip in our score was due to the fact that PHP-FPM uses a different php.ini file, one that didn't have the secure and httponly flags applied to the cookies. We fixed that, restarted Apache, and we're back to 100%!

Scan often!

Backblaze So Smooth

March 3rd, 2020

We're big fans of the Backblaze Blog. These folks jump into technical topics to talk about how their systems are designed, and that has inspired these posts. Today's is a bit more technical. This one won't affect your score, just how we do stuff internally.

First of all, today we hit 200,000 domains scanned! Of course, we're just running through the Alexa Top 1M sites; it's not like I know that many sites -- or have that many friends. But doing this at scale while keeping the site (on an anemic VPS) performing well isn't the easiest task.

A little history:

- Originally, I only scanned the Moz Top 500 Sites, which was mostly manual (scripting a bunch of calls to the site)

  - Was painfully inefficient, since we had to render the entire web page, something we're not consuming

  - It was great for 500 sites, one-off... But for 1M sites, it stopped being efficient.

  - Running at 100% CPU utilization made our site painful to use, but who cares, nobody knew of it yet and it was late at night

- Now it's time to start doing the 1M sites. Let's do it like we did the Top 500... Quickly, this fell out of favor since it wasn't performant.

  - Built a queue system: all sites are added to a queue with a default 5/10 priority.

  - Normal day-to-day scans are done through the same interface, but are not assigned a priority; they are done instantly.

  - I would log into the admin interface and hit "Add to Queue". This would essentially add 5,000 domains from the Alexa list to our queue, after ensuring each wasn't added to the site previously.

  - Then, when I was bored, I'd run a "Trigger Executor" process, which would fire off a batch of 60 domains (sequentially, thanks lack of asynchronous functions!). So I'd open up 10 tabs or so, which would make a bunch of executors fire off. I'd do this from a few machines. Eventually, having hundreds of parallel requests would cause my database to freeze.

- Realized that queue system, while functional, only consumed data, not created it. Improved the queue:

  - Every minute, a process was called to execute the queue (process sites from the list).

  - To ensure race conditions were not hit, each minute a queue executor will grab a "work claim". This marks the domains (up to 60) with the timestamp of the trigger, along with a UUID.

  - Subsequent executors will not grab these "tasks". If the tasks run > 5 minutes, they are assumed to be hung. There's a recovery function for those domains as well.

  - Now, I'd have to go in and trigger the add to queue function, which was not optimized at all. This would send three queries per domain (to check if they failed, succeeded, or were already in a queue)

  - Set the queue adder to run every two hours (so we'd see 60,000 new domains per day). It worked, but was stupid slow.

- Made a new queue feeder to populate tasks:

  - New queue system simply worked down the 1M list using one query per domain. This thing FLEW, and did not require any manual triggers.

  - Was STILL slow, despite adding over 250,000 domains to the list in 24 hours.

  - After a fix in our cURL parameters, realized we were logging verbose. We only realized this because our VPS ran completely out of disk space. Our error logs were full of garbage. Truncated the error logs, disabled automatic processing, and moved on.

  - At this point, we simply stopped the queue adders. We were over 200k down the 1M list, unlikely to be scanning a lot of sites more than once. We simply fed the entire log file into the queue. Since then, we've added a "Top 10" list at the top of the page, removed the "Header Ballpark" functionality (it was boring anyways), and completely populated our queue. Now, we're working through them at ~100/minute.

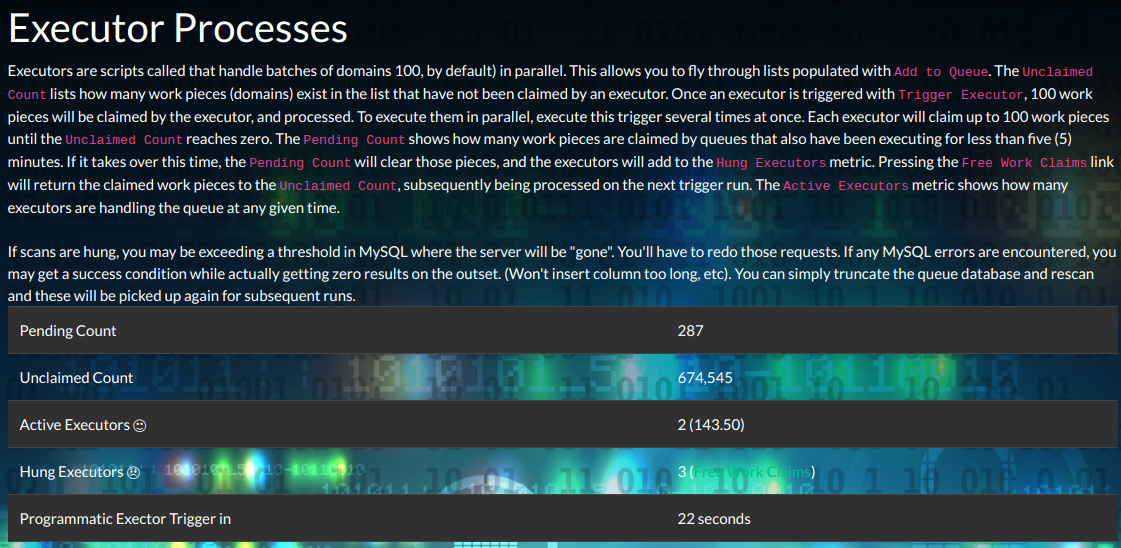

Here's a screenshot of our admin page showing the executors. There's a great chance this gets improved a lot in the future.

Scan often!

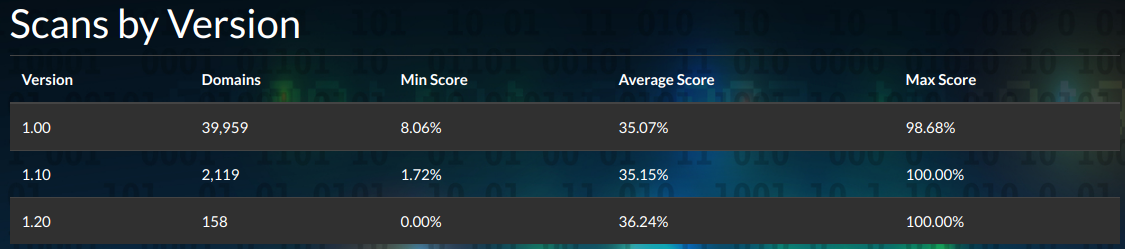

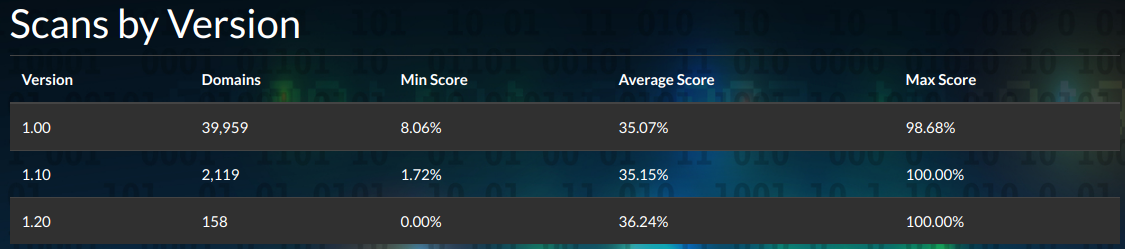

Scanner v1.20

Also, February 21st, 2020

We were still seeing the same odd bottom value of 8.06%. We found out that our Expect-CT module would give you four points if the header was missing and you were not using HTTPS. Another small bug; we removed that and the bottom value dropped to 1.27%.

We should find 0% and 100% in the wild, and now we do. We used to give one point for Content-Type being present; now you get zero unless you also have a valid charset attribute. This means that those 8.06% sites are now at a zero.

Also fun: This site likes to chew through some top website lists on occasion. I used to manually kick these processes off, which was boring and slow. Now, I've automated the process so it will programmatically scan millions of sites a year. Spicy.
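For the Content-Type change described above, a check along these lines would behave the same way. This is a Python sketch, not the scanner's code; treating "valid" as "a charset name Python's codec registry recognizes" and awarding exactly one point are both assumptions.

```python
import codecs
from email.message import Message

def content_type_points(header_value):
    """Return 1 only when Content-Type carries a recognizable charset."""
    if not header_value:
        return 0
    # The stdlib email parser handles "type/subtype; param=value" syntax.
    msg = Message()
    msg["Content-Type"] = header_value
    charset = msg.get_content_charset()
    if charset is None:
        return 0
    try:
        codecs.lookup(charset)  # assumption: "valid" means a known codec name
    except LookupError:
        return 0
    return 1
```

With this rule, `text/html` on its own scores zero, while `text/html; charset=utf-8` scores the point.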

Finally, fixed a minor bug internally where CURLOPT_SSL_VERIFYHOST was written as CURLOPT_VERIFYHOST, a non-existent flag. Most likely you won't see a change unless you are using a broken cert, in which case we may be able to connect to you now.

Scan often!

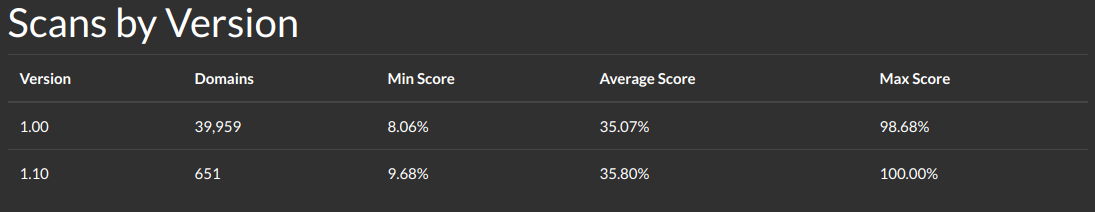

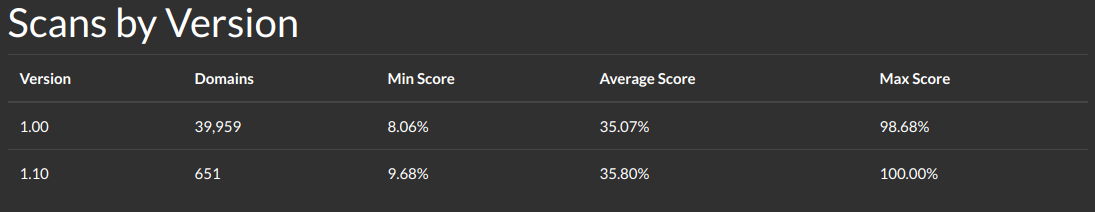

Scanner v1.10

February 21st, 2020

Welcome to 2020! We added a contact us system, we improved our cookies to include the "SameSite" attribute (we didn't set them on our home page for a while), and we're the first site to get 100%!!!!!!!!!!

I know what you're thinking, every teacher gives themselves 100%... In this case, we took a look at our nearly 40,000 domains that have been scanned, and realized we never once gave a 100%. I figured it was a bug, but it came down to the "Server Header" module.

We strongly dislike that Apache doesn't make it logically easy to suppress the header, so we used to knock you down one "point"... It was just enough to prevent anybody from hitting 100%, since apparently Apache runs the internet these days.

We removed the impact if your site says Apache... It isn't great, but I don't think this site will move the entire Apache org to suppress a header.

Rescan your site with v1.10!

Minor Site Fix

December 9th, 2019

Minor stuff, but still annoying: Our bootstrap nav didn't flow properly on mobile, so the icon for the menu wouldn't be visible, and even if you knew where to look, it didn't work. We're all fixed up now.

We finally dropped HTTP Public Key Pinning (HPKP) checks

November 22nd, 2019

Back on July 4th, we dropped any scoring weight from the HPKP module, but it took us until October 3rd to realize we had rolled that in a bit incorrectly (read the old changelogs if you're interested).

At this point, browsers haven't only deprecated it, they've removed the code that made it work.

So us checking for the header and having any sort of message about it is just going to be confusing, and now that I'm working on putting together an HTTP Header Library, any point of "being complete" has gone away. So I've dropped the module completely, no messages at all. I won't be iterating the scanner version since this module had no impact on your scoring as it was.

In fact, we've added a new "Things that don't matter" section, where we can place information about meaningless or legacy headers. These won't touch your score either. We'll let you know that this header is useless in this section now.

Added some headers that, if detected, get an informational note saying they can be removed:

- P3P

- Content-MD5

VERSION ONE RELEASED

November 14th, 2019

This may not make a lot of sense, but now that we've moved from https://secureheader.com to https://headerinspector.com, made a bunch of bugfixes, and made some other big changes (see below), I've decided to dump the > 40,000 scanned domain data. This had a ton of failed request data and other information that simply didn't help me diagnose issues with the software, and didn't give users actionable information. You won't see historical data from the old site here, and that's okay. I am, however, keeping the changelog for that site below (because it's interesting).

Remember we're now at Version 1.0 for this site. All the previous changes + cURL have been rolled into this new one.

- Originally wrote an HTTP client to pull down data. This was fun and gave us some improved SSL/TLS analytics, but there are better tools out there than I can write with my limited cryptography knowledge.

- Changed HTTP Client to cURL:

  - Significantly better analytics of why a request failed. We also improved how we log this so we can actually fix stuff. (If we time out too often, we'll kick the number up, for example.)

  - We used to ignore the scheme you'd submit, and try various "www" and bare hostname options. We still do that, but the logic has improved. We also used to track failed requests (even if it was due to our stupid rewriting of your hostname). We're going to do a better job here and only mark a domain as a failed scan after all attempts have been exhausted.
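The retry logic in the last bullet might look something like this Python sketch. The candidate order (HTTPS before HTTP, submitted hostname before its www/bare twin) and the `fetch` callback are assumptions for illustration; the real scanner uses cURL from PHP and its exact order isn't spelled out here.

```python
from urllib.parse import urlsplit

def candidate_urls(submitted):
    """Build the ordered list of URLs to try, ignoring the submitted scheme."""
    parts = urlsplit(submitted if "//" in submitted else "//" + submitted)
    host = (parts.hostname or "").lower()
    if not host:
        return []
    bare = host[4:] if host.startswith("www.") else host
    # Try the hostname as submitted first, then its www/bare counterpart.
    hosts = [host] + [h for h in (bare, "www." + bare) if h != host]
    return [f"{scheme}://{h}/" for scheme in ("https", "http") for h in hosts]

def scan(submitted, fetch):
    """Return the first successful fetch; fail only once every attempt has failed.

    `fetch(url)` is a hypothetical callback that returns response headers
    or raises OSError (connection refused, timeout, TLS failure, ...).
    """
    errors = []
    for url in candidate_urls(submitted):
        try:
            return {"ok": True, "url": url, "headers": fetch(url)}
        except OSError as exc:
            errors.append((url, str(exc)))
    return {"ok": False, "errors": errors}
```

The key point is that a single failed attempt is only recorded in `errors`; the domain is reported as a failed scan once the whole candidate list has been tried.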

SecureHeader.com Changelog follows

This stuff doesn't matter as much here -- especially version numbers.

Another Version Bytes the Dust!

November 8th, 2019

An issue was detected in the HSTS module where sites that use HTTPS but do not set a Strict-Transport-Security header get an invalid message about their sites "not supporting HTTPS." Scores may be mildly impacted by this change. We're also working on a few sweet changes:

- Dark Theme

- Occasionally, sites would fail to properly scan. These sites would show up with a report that was blank. We're capturing these pages and showing an error message, and we plan to investigate what triggers these events.

- The EOL Software module, if triggered on both X-Powered-By and Server, would concatenate the error messages, which looked gross. We also added an "underline" feature to help you see exactly what we saw when we said your product was using EOL software.

- We missed PHP/7.0 in our EOL list. We don't hit your score for EOL software, so this won't change anything. Soon, 7.1 will be EOL too (21 days). So we'll update the list then.

Started on a new name / new domain!

November 7th, 2019

I thought of a better name for the tool, and decided it would give me a chance to work on a cleaner and more performant version of the site. I won't redirect the old site here for a bit, until I get this going 100%. Going to build some cool features under the hood (queuing and multithreading) to allow the site to handle larger loads.

New Check for End-Of-Life Software

October 7th, 2019

You should be hiding your X-Powered-By and Server headers, but if you're not, we'll compare the versions you're leaking (if any) against our database of known deprecated, EOL, or otherwise unsupported versions of software, and we'll throw up an alert in the "bad" section. Since we already "punish" your score for leaking these headers, they currently have no impact on metrics -- so your score will stay the same as our last release.
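As a rough idea of how such a check can work, here's a Python sketch that pulls `product/version` tokens out of the two headers and compares them with a small lookup table. The table below is illustrative only, not our actual database, and the function and variable names are made up.

```python
import re

# Illustrative table: product -> version prefixes treated as end-of-life.
EOL_VERSIONS = {
    "php": ("5", "7.0"),
    "apache": ("2.2",),
}

# Matches tokens such as "PHP/7.0.33" or "Apache/2.4.41".
PRODUCT_RE = re.compile(r"([A-Za-z][\w.-]*)/(\d+(?:\.\d+)*)")

def is_eol(product, version):
    prefixes = EOL_VERSIONS.get(product.lower(), ())
    # "7.0" matches "7.0" and "7.0.33", but not "7.01" or "7.1.3".
    return any(version == p or version.startswith(p + ".") for p in prefixes)

def eol_findings(headers):
    """Return (header, product, version) for each leaked EOL version.

    `headers` is assumed to be a dict keyed by the exact header names below.
    """
    findings = []
    for name in ("X-Powered-By", "Server"):
        for product, version in PRODUCT_RE.findall(headers.get(name, "")):
            if is_eol(product, version):
                findings.append((name, product, version))
    return findings
```

Returning one tuple per finding keeps the messages for X-Powered-By and Server separate, so each can be reported on its own line.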

Some More Changes...

October 5th, 2019

Lots of small changes pushed yesterday and today:

- The headers section has been moved lower on the page, after the information blocks

- An indicator was added next to your score to show how much it has improved, or gotten worse, from the last scan

- I've built out an admin system to give me a bit more insight into how folks are using this site, how different versions are scoring and some actual analytics into failed scans (those that we can't complete if a site is down, etc)

- I've added the version of the Moz 500 sites that are showing up with your scores. They're still all at "1.00" right now, but I'm slowly getting through a stale version of the Alexa Top 1 Million Sites which will give me a lot more information

- Also: I'm tracking the scores each scan returns for each module I add, so I can get some good comparative intel into what sites are doing well and where some education will help.

- HSTS has no impact on sites not running on HTTPS. I used to short this out by giving 100% of points, but this was a bad approach, so expect non-HTTPS sites to slide further down the scoring.

- Cookie parameters had a minor effect on your score if you didn't have any cookies set (again, giving 100% of points). If you don't set cookies on your landing page, then your score may go down slightly to back off the erroneous points you received before.

- Iterated to version 3.3 to reflect the fact that modules now have dynamic maximum weight scores and the score tuning mentioned here.

Minor Version Bump

October 3rd, 2019

We just bumped the scanner version from v3.1 to v3.2 to address a misconfiguration issue in our HPKP module. Back in version 3.0, we removed the weight of HTTP Public Key Pinning (HPKP). For sites that did not use HTTPS, this module is not needed, so it should have assigned a weighted score equal to the total score (both of which should be zero). Sadly, this module gave a "credit" of one point if you were not using HTTPS, so sites operating over HTTP would see a minimal score improvement. This issue was resolved and our scanner will be updated to ensure that the weighted score cannot exceed the module's maximum score.
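The guard described in the last sentence boils down to clamping each module's score to its maximum. Here's a minimal Python sketch; the class and field names are hypothetical, and the real scanner's data structures may look nothing like this.

```python
from dataclasses import dataclass

@dataclass
class ModuleResult:
    name: str
    score: float      # points the module tried to award
    max_score: float  # the module's maximum weight; 0 means it doesn't count

    def weighted(self):
        # Clamp into [0, max_score] so a zero-weight module can never add credit.
        return max(0.0, min(self.score, self.max_score))

def total_percent(results):
    possible = sum(r.max_score for r in results)
    if possible == 0:
        return 0.0
    earned = sum(r.weighted() for r in results)
    return round(100.0 * earned / possible, 2)
```

With this in place, an HPKP result of `ModuleResult("hpkp", score=1, max_score=0)` contributes nothing, which is the behavior the bug fix was after.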

Requesting Sites Differently

July 27th, 2019

Originally, we made a quick HEAD request to your site, checked the headers, and reported. Some sites (like www.masterlock.com) correctly handled methods they didn't want with an HTTP 405 Method Not Allowed. Others (like www.bluehost.com) returned an HTTP 406 Not Acceptable. I've tried a few different fixes for these sites, from updating the request headers to show the latest Firefox version, to changing to Accept: */*. This didn't fix the issue for the 406 sites. I then tried changing the request method from HEAD to GET and I started getting valid HTTP 200 OK responses. Because of this, I've changed the request system going forward to use GET and I've also iterated the version of the scans from v3.0.0 to v3.1.0. Your scores may be impacted, so check often!
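We went with GET for every request, but for anyone who wants to keep the cheaper HEAD where it works, a fallback is one alternative. The Python sketch below shows that variant, not what this site does; the function names and the set of status codes that trigger the retry are assumptions.

```python
import urllib.error
import urllib.request

# Statuses that suggest the server refused HEAD itself (an assumed set).
RETRY_WITH_GET = {405, 406, 501}

def http_request(url, method):
    """Send one request and return (status, headers) even for error statuses."""
    req = urllib.request.Request(url, method=method, headers={"Accept": "*/*"})
    try:
        with urllib.request.urlopen(req, timeout=10) as resp:
            # Note: dict() keeps only one value for repeated headers like Set-Cookie.
            return resp.status, dict(resp.headers.items())
    except urllib.error.HTTPError as err:
        return err.code, dict(err.headers.items())

def fetch_headers(url, request=http_request):
    """Try HEAD first; repeat with GET when the server rejects HEAD."""
    status, headers = request(url, "HEAD")
    if status in RETRY_WITH_GET:
        status, headers = request(url, "GET")
    return status, headers
```

The `request` parameter exists so the transport can be swapped out (for a test double, say) without touching the fallback logic.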

Major Scanning / Scoring Rewrite

July 4th, 2019

The original version of this site focused heavily on getting the content from the site and outputting suggestions. Scoring information gamifies security and creates additional motivation to improve your score. The previous version of the site worked in such a way that any "good" status was 10 points, any "improve" was 5, and any "bad" was 0. Since headers have varying importance levels, and configuration options of those headers can greatly improve or reduce the effectiveness of a header, it only made sense to allow more dynamic scoring.
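For reference, the old flat scheme amounts to the arithmetic below. The point values come from the paragraph above; turning the total into a percentage of the maximum possible is an assumption about how the final number was presented.

```python
# Old flat scoring: every check is worth the same regardless of importance.
OLD_POINTS = {"good": 10, "improve": 5, "bad": 0}

def old_score(statuses):
    """Percentage of possible points under the flat 10/5/0 scheme."""
    if not statuses:
        return 0.0
    earned = sum(OLD_POINTS[status] for status in statuses)
    return round(100.0 * earned / (10 * len(statuses)), 2)
```

Two "good", one "improve", and one "bad" works out to 25 of 40 points, no matter which headers those were, which is exactly the limitation the rewrite addresses.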

Remember that your score probably will change when we make these changes. Some sites have improved in score, others have actually gone down in score. We've also rebracketed the "score words" that quantify the number (Meh, Fail, etc). These have become more strict.

Rewrite Scanner

The scanner was implemented in such a way that improving the codebase was a mess. It was a demonstration piece that became useful. The entire backend of this site was rewritten to use a series of modules that control their own point systems and output data about a header. This modularity makes improving a single module much easier and will only allow better scanning in the future.

Other Changes

- Due to waning support of HPKP, this header has no impact on your site score

- Expect-CT, while technically in an expired draft, is geared to replace it. Some weight was given to this header.

- 🤷 Expect-Staple seems like it might be something, but most anything about it seems to be from 2017. I won't build this one in here until I see something solid about how it should be implemented and then I can test for a way to implement it.

- We will disregard protocol provided and check HTTPS first, then HTTP. Your score will be hit pretty hard if you're only serving over HTTP

- We added require-sri to our CSP, and added Expect-CT header to our site. There's no point dispensing advice if we can't follow it!

- For those sites implementing Content-Security-Policy-Report-Only, you will receive a minor bump in scoring. Full scoring can be achieved by using Content-Security-Policy.

- Sites that set cookie(s) on pageload will now be checked for the Secure (on HTTPS sites), SameSite and HTTPOnly flags
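The cookie check in the last bullet can be approximated with a small parser over a single Set-Cookie value. This is a Python sketch under the assumption that only the presence of each flag matters; the function name is made up, and attribute values (such as SameSite=None) are not evaluated.

```python
def cookie_flag_report(set_cookie_value, https=True):
    """Return (cookie name, list of missing flags) for one Set-Cookie value."""
    parts = [part.strip() for part in set_cookie_value.split(";")]
    name = parts[0].split("=", 1)[0]
    # Attribute names are case-insensitive, so normalize them to lowercase.
    attrs = {}
    for part in parts[1:]:
        if part:
            key, _, value = part.partition("=")
            attrs[key.strip().lower()] = value.strip()
    missing = []
    if https and "secure" not in attrs:  # Secure is only expected on HTTPS sites
        missing.append("Secure")
    if "httponly" not in attrs:
        missing.append("HttpOnly")
    if "samesite" not in attrs:
        missing.append("SameSite")
    return name, missing
```

A bare `sid=abc; Path=/` on an HTTPS site would come back missing all three flags, while the same cookie on a plain HTTP site would only be flagged for HttpOnly and SameSite.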

Future Changes

Currently, we only check that you're using specific headers, and a few parameters within them. The goal is to build out a full parameter audit as well, which will give you the best idea of how you can improve your security.